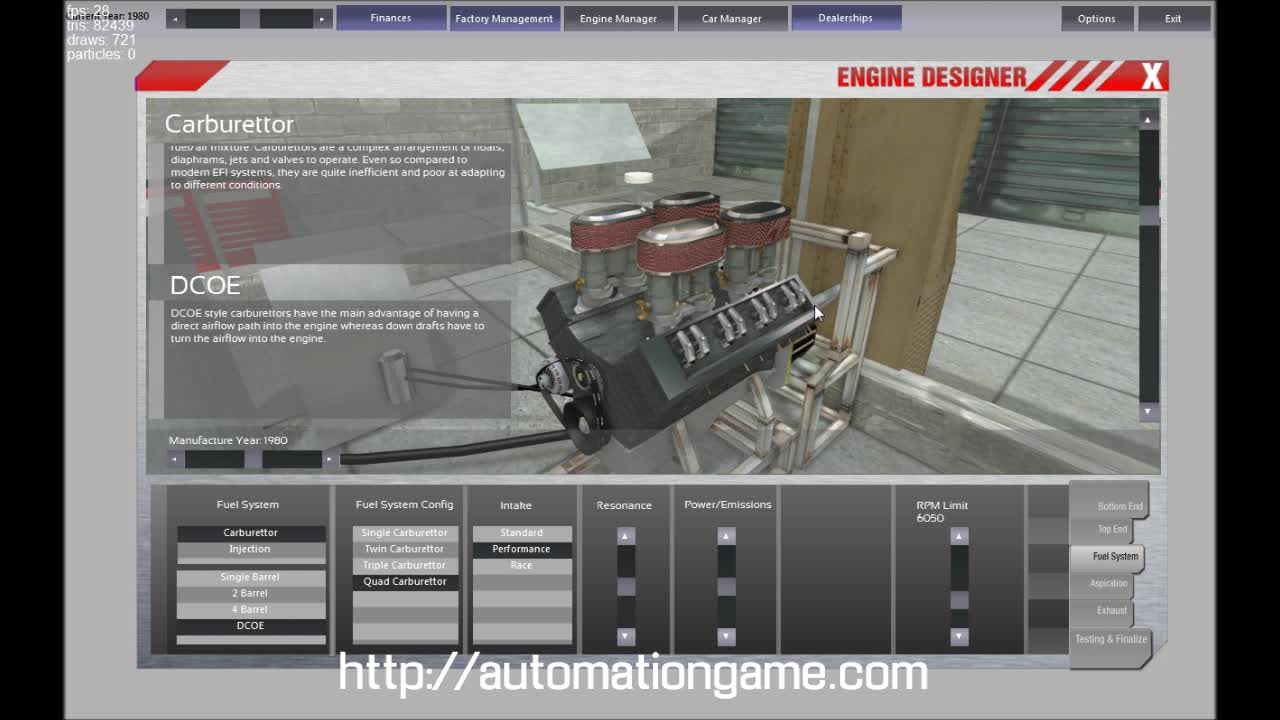

An Animation clip is animation data that can be used for animated characters or simple animations. It is a simple “unit” piece of motion, such as one specific instance of “Idle”, “Walk” or “Run”. You can increase the usefulness of Animation clips by using Animation Events, which allow you to call functions in the object’s script at specified points in the timeline.

The function called by an Animation Event also has the option to take one parameter. The parameter can be a float, string, int, or object reference, or an AnimationEvent object. The AnimationEvent object has member variables that allow a float, string, integer and object reference to be passed into the function all at once, along with other information about the Event that triggered the function call.

The online streaming game is a grind, with full-time creators putting in eight- if not 12-hour performances on a daily basis. In a bid to capture valuable viewer attention, 24-hour marathon streams are not uncommon either.

However, these hours in front of the camera and keyboard are only half of the streaming grind. Maintaining a constant presence on social media and YouTube fuels the growth of the stream channel and attracts more viewers to catch a stream live, where they may purchase monthly subscriptions, donate and watch ads.

Distilling the most impactful five to 10 minutes of content out of eight or more hours of raw video becomes a non-trivial time commitment. At the top of the food chain, the largest streamers can hire teams of video editors and social media managers to tackle this part of the job, but growing and part-time streamers struggle to find the time to do this themselves or come up with the money to outsource it. There aren’t enough minutes in the day to carefully review all the footage on top of other life and work priorities.

Computer vision analysis of game UI

An emerging solution is to use automated tools to identify key moments in a longer broadcast. Several startups compete to dominate this emerging niche. Differences in their approaches to solving this problem are what differentiate competing solutions from each other. Many of these approaches follow a classic computer science hardware-versus-software dichotomy.

Athenascope was one of the first companies to execute on this concept at scale. Backed by $2.5 million of venture capital funding and an impressive team of Silicon Valley Big Tech alumni, Athenascope developed a computer vision system to identify highlight clips within longer recordings.

In principle, it’s not so different from how self-driving cars operate, but instead of using cameras to read nearby road signs and traffic lights, the tool captures the gamer’s screen and recognizes indicators in the game’s user interface that communicate important events happening in-game: kills and deaths, goals and saves, wins and losses. These are the same visual cues that traditionally inform the game’s player what is happening in the game. In modern game UIs, this information is high-contrast, clear and unobscured, and typically located in predictable, fixed locations on the screen at all times.
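The screen-reading idea described in this piece, watching a fixed region of the game UI for an indicator and cutting a clip around each appearance, can be sketched roughly as follows. This is only an illustration under loud assumptions: the article does not say how Athenascope's system works, and the plain pixel-difference template match, the function names (`find_events`, `to_clips`) and the thresholds here are all hypothetical. A real tool would decode video frames and use a far more robust detector.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale pixels, 0-255, indexed [row][col]


def crop(frame: Frame, top: int, left: int, height: int, width: int) -> Frame:
    """Cut the fixed UI region out of a full frame."""
    return [row[left:left + width] for row in frame[top:top + height]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel difference between two equally sized patches."""
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def find_events(frames: List[Frame], template: Frame, top: int, left: int,
                fps: float, max_diff: float = 20.0) -> List[float]:
    """Return timestamps (seconds) at which the indicator first appears.

    Assumes the indicator always sits at (top, left), which is the
    'predictable, fixed location' property the article relies on.
    """
    height, width = len(template), len(template[0])
    events: List[float] = []
    visible = False
    for index, frame in enumerate(frames):
        patch = crop(frame, top, left, height, width)
        match = mean_abs_diff(patch, template) <= max_diff
        if match and not visible:  # record only the moment it appears
            events.append(index / fps)
        visible = match
    return events


def to_clips(events: List[float], before: float = 5.0,
             after: float = 3.0) -> List[Tuple[float, float]]:
    """Pad each event into a (start, end) window and merge overlaps."""
    clips: List[Tuple[float, float]] = []
    for t in events:
        start, end = max(0.0, t - before), t + after
        if clips and start <= clips[-1][1]:
            clips[-1] = (clips[-1][0], end)  # extend the previous clip
        else:
            clips.append((start, end))
    return clips
```

Checking only a small, fixed region keeps the per-frame work small, which matters when scanning eight or more hours of footage. Merging overlapping windows means a burst of events yields one continuous highlight rather than several near-identical clips.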
